Data Labeling for Computer Vision

Boost your object detection, classification, and segmentation models with the highest-quality datasets. Innovatiana offers tailor-made image annotation services for your artificial intelligence projects.

Our experts in data annotation for AI combine thoroughness, technical mastery and knowledge of advanced tools to transform your image and video assets into usable data for Computer Vision models.

Image annotation

Video annotation

3D point cloud annotation

Medical annotations

Image annotation

We transform your visual data into strategic resources thanks to human and technological expertise adapted to each sector.

Bounding Boxes

Bounding box annotation consists of precisely delineating the objects of interest in an image with rectangles, so that a Computer Vision model can learn to detect or recognize them automatically.

Definition of the annotation plan and the classes of objects to be located

Manual or semi-automated annotation by bounding boxes (images, videos, satellite views, ...)

Cross-validation and quality control (label consistency, overlaps, coverage rate, ...)

Export annotations to standard formats (COCO, YOLO, Pascal VOC, ...)

Industrial inspection — Detection of defects on parts in production

Autonomous driving — Tracking vehicles, pedestrians, traffic signs

Satellite imagery — Location of buildings, agricultural or forest areas
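
The three export formats listed above describe the same rectangle in different ways: COCO stores `[x, y, width, height]` from the top-left corner in pixels, Pascal VOC stores the corners `(xmin, ymin, xmax, ymax)`, and a YOLO label line stores a class index followed by the box centre and size normalised by the image dimensions. The following is a minimal conversion sketch; `CocoBox` and the function names are illustrative helpers, not part of any library.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CocoBox:
    """A COCO-style box: top-left corner plus width and height, in pixels."""
    x: float
    y: float
    width: float
    height: float


def coco_to_voc(box: CocoBox) -> tuple[float, float, float, float]:
    """Pascal VOC uses corner coordinates (xmin, ymin, xmax, ymax) in pixels."""
    return (box.x, box.y, box.x + box.width, box.y + box.height)


def coco_to_yolo(box: CocoBox, img_w: int, img_h: int) -> tuple[float, float, float, float]:
    """YOLO uses the box centre and size, each normalised to [0, 1] by the image size."""
    cx = (box.x + box.width / 2) / img_w
    cy = (box.y + box.height / 2) / img_h
    return (cx, cy, box.width / img_w, box.height / img_h)


def yolo_line(class_id: int, box: CocoBox, img_w: int, img_h: int) -> str:
    """One line of a YOLO label file: class index, then normalised centre and size."""
    cx, cy, w, h = coco_to_yolo(box, img_w, img_h)
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


box = CocoBox(x=100, y=50, width=200, height=100)
print(coco_to_voc(box))             # (100, 50, 300, 150)
print(yolo_line(0, box, 640, 480))  # 0 0.312500 0.208333 0.312500 0.208333
```

Keeping one internal representation and converting only at export time avoids rounding drift when the same dataset is delivered in several formats.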

Polygons

Polygon annotation precisely delineates the complex contours of objects in an image (irregular shapes, nested objects, etc.), which is essential for instance or semantic segmentation models.

Definition of categories and segmentation criteria

Manually annotate objects by drawing polygons point by point

Quality control and cross-checking of contours and classes

Export in suitable formats (COCO, PNG masks, formats expected by models such as Mask R-CNN, ...)

Industrial inspection — Precise detection of faulty areas

Autonomous driving — Segmentation of roads, sidewalks, vehicles

Satellite imagery — Delimitation of crops, buildings or natural areas
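
Polygons are usually stored as vertex lists (COCO, for instance, writes each polygon as a flat `[x1, y1, x2, y2, ...]` list) and converted to pixel masks for segmentation training. The sketch below shows one simple way to do that, assuming a pixel belongs to the object when its centre falls inside the polygon (even-odd rule), plus the shoelace formula for the polygon's area. The function names are hypothetical; production tools typically use optimised rasterisers.

```python
def coco_polygon_to_points(flat):
    """COCO stores each polygon as a flat list [x1, y1, x2, y2, ...]."""
    return list(zip(flat[0::2], flat[1::2]))


def polygon_area(points):
    """Shoelace formula: half the absolute sum of cross products of consecutive vertices."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def point_in_polygon(px, py, points):
    """Even-odd rule: a ray from (px, py) towards +x crosses the boundary an odd number of times if inside."""
    inside = False
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        if (y1 > py) != (y2 > py):  # edge spans the ray's height, so y1 != y2
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(points, width, height):
    """Binary mask: a pixel is set when its centre (x + 0.5, y + 0.5) lies inside the polygon."""
    return [
        [1 if point_in_polygon(x + 0.5, y + 0.5, points) else 0 for x in range(width)]
        for y in range(height)
    ]


square = coco_polygon_to_points([1, 1, 4, 1, 4, 3, 1, 3])
mask = polygon_to_mask(square, width=5, height=4)
print(polygon_area(square), sum(map(sum, mask)))  # 6.0 6
```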

Keypoints

Keypoint annotation consists of placing reference points on specific parts of an object (e.g., human joints, facial landmarks, mechanical components) in order to train models for posture detection, pose estimation, motion tracking or fine-grained recognition.

Definition of the skeleton of points (number, name, relationships between keypoints)

Manually placing keypoints on each frame or sequence

Quality control on spatial coherence and alignment errors

Export to JSON or COCO keypoints

Industrial inspection — Identification of precise components or sensors on machines

Autonomous driving — Monitoring the movements of pedestrians or cyclists

Health/sport — Analysis of posture, gestures or joint movements
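
In the COCO keypoints format, each annotation stores a flat `[x1, y1, v1, x2, y2, v2, ...]` list, where the visibility flag `v` is 0 (not labelled), 1 (labelled but not visible) or 2 (labelled and visible), and `num_keypoints` counts the keypoints with `v > 0`; the category's `skeleton` lists edges as pairs of 1-based keypoint indices. The sketch below encodes one annotation under those rules; the three-point arm skeleton and the helper names are hypothetical examples.

```python
# COCO visibility flags: 0 = not labelled, 1 = labelled but not visible, 2 = labelled and visible
NOT_LABELLED, OCCLUDED, VISIBLE = 0, 1, 2

# Hypothetical three-point skeleton; COCO "skeleton" edges use 1-based keypoint indices
ARM_KEYPOINTS = ["shoulder", "elbow", "wrist"]
ARM_SKELETON = [[1, 2], [2, 3]]


def encode_keypoints(names, placed):
    """Flatten placed keypoints into COCO's [x1, y1, v1, x2, y2, v2, ...] layout.

    `placed` maps a keypoint name to (x, y, visibility); missing names are written as 0, 0, 0.
    """
    flat = []
    for name in names:
        x, y, v = placed.get(name, (0, 0, NOT_LABELLED))
        flat.extend([x, y, v])
    # num_keypoints counts every keypoint whose visibility flag is non-zero
    num_keypoints = sum(1 for v in flat[2::3] if v > 0)
    return {"keypoints": flat, "num_keypoints": num_keypoints}


def check_skeleton(names, skeleton):
    """Basic coherence check: every edge must link two distinct, existing keypoints."""
    for a, b in skeleton:
        if a == b or not (1 <= a <= len(names) and 1 <= b <= len(names)):
            raise ValueError(f"invalid skeleton edge {[a, b]}")


check_skeleton(ARM_KEYPOINTS, ARM_SKELETON)
ann = encode_keypoints(ARM_KEYPOINTS, {"shoulder": (120, 80, VISIBLE), "wrist": (160, 150, OCCLUDED)})
print(ann)  # {'keypoints': [120, 80, 2, 0, 0, 0, 160, 150, 1], 'num_keypoints': 2}
```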

Segmentation

Segmentation annotation assigns a label to every pixel of an image so that a model can accurately learn the contours and nature of objects, surfaces or areas. It is essential for semantic and instance segmentation tasks, which are used in the most advanced vision systems.

Definition of the classes to be segmented (objects, surfaces, materials,...)

Pixel by pixel annotation (manual or assisted)

Quality control by proofreading and harmonizing contours

Export to PNG mask, COCO segmentation, or vector files

Industrial inspection — Precise detection of areas with defects or wear

Autonomous driving — Fine segmentation of the road, sidewalks, vehicles

Satellite imagery — Classification of urban, natural or agricultural areas

Polylines

Polyline annotation consists of drawing continuous lines on images to represent linear structures such as roads, the contours of thin objects, cables or trajectories. It is especially useful when objects are too thin or elongated to be annotated effectively with a bounding box or polygon.

Define the categories of linear elements to be annotated

Manual or assisted drawing of polylines on images

Verification of the continuity, precision and consistency of the layout

Export in compatible formats (JSON, GeoJSON, adapted COCO)

Industrial inspection — Annotation of cracks, welds, cabling

Autonomous driving — Tracing ground markings or vehicle trajectories

Satellite imagery — Delineation of roads, rivers or power lines
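
A polyline can be exported as a GeoJSON `LineString` feature with its label in `properties`. The sketch below assumes plain pixel coordinates; note that standard GeoJSON (RFC 7946) expects WGS 84 longitude/latitude, so storing image coordinates this way is a pragmatic convention to agree on per project. The helper names and the `length_px` property are hypothetical.

```python
import json
import math


def polyline_length(coords):
    """Sum of the straight segment lengths between consecutive vertices."""
    return sum(math.dist(a, b) for a, b in zip(coords, coords[1:]))


def polyline_feature(coords, label, **properties):
    """Wrap one annotated polyline as a GeoJSON Feature with a LineString geometry."""
    if len(coords) < 2:
        raise ValueError("a polyline needs at least two vertices")
    return {
        "type": "Feature",
        "geometry": {"type": "LineString", "coordinates": [list(c) for c in coords]},
        "properties": {"label": label, **properties},
    }


crack = [(0, 0), (3, 4), (3, 10)]
collection = {
    "type": "FeatureCollection",
    "features": [polyline_feature(crack, "crack", length_px=polyline_length(crack))],
}
print(json.dumps(collection))
```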

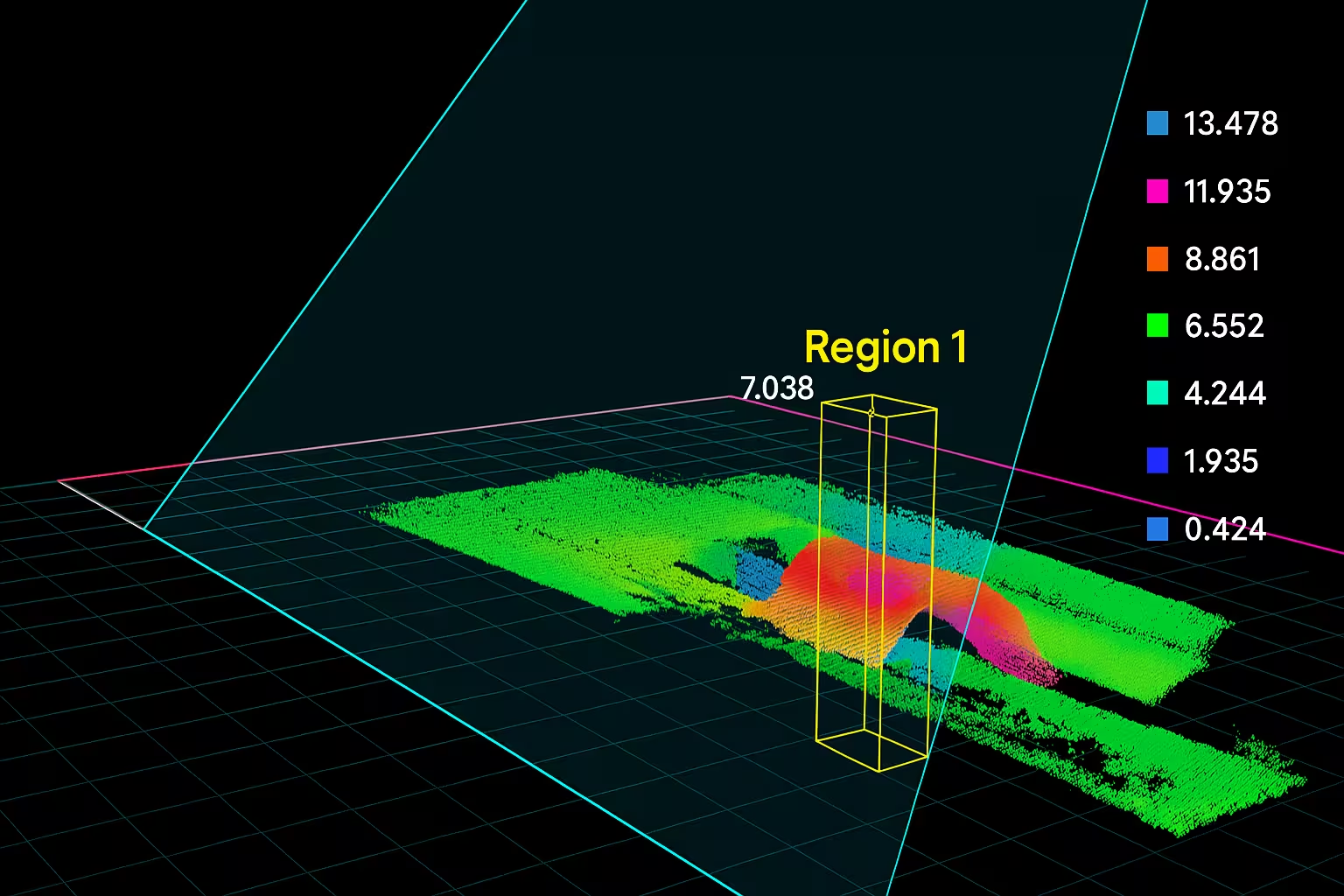

Cuboids

Cuboid annotation delimits objects in three-dimensional space from 2D images, LiDAR data or video sequences. It is essential for training 3D perception models in complex environments (autonomous driving, robotics, logistics, ...).

Definition of object classes to be modelled in 3D

Manual or semi-automated placement of cuboids across multiple views or point clouds

Alignment and verification of dimensions, orientation and depth

Export in compatible formats (KITTI, PCD, 3D JSON, ...)

Autonomous driving — Vehicle and pedestrian detection with distance and volume estimation

Logistics — Location and sizing of packages in warehouses

3D mapping — Annotation of buildings or structures in urban environments
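
A cuboid is typically described by a centre, three dimensions and a heading angle, and checking "dimensions, orientation and depth" amounts to working with that parameterisation. The sketch below computes the eight corners of a box and tests whether a point falls inside it. It assumes a z-up frame with yaw measured about the vertical axis, as is common for LiDAR; conventions differ between formats (KITTI labels, for example, express `rotation_y` in the camera frame), so adapt the axes to the target format. Function names are illustrative.

```python
import math


def cuboid_corners(center, size, yaw):
    """Return the 8 corners of a box.

    center = (x, y, z), size = (length, width, height), yaw = rotation in radians
    about the vertical z axis (assumes a z-up frame).
    """
    cx, cy, cz = center
    length, width, height = size
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                # Rotate the horizontal offset by yaw; the vertical offset is unchanged
                corners.append((cx + dx * c - dy * s, cy + dx * s + dy * c, cz + dz))
    return corners


def point_in_cuboid(point, center, size, yaw):
    """True if a point lies inside the box, found by undoing the yaw rotation."""
    dx, dy, dz = (p - q for p, q in zip(point, center))
    c, s = math.cos(yaw), math.sin(yaw)
    local_x = dx * c + dy * s   # inverse rotation (transpose of the yaw matrix)
    local_y = -dx * s + dy * c
    length, width, height = size
    return abs(local_x) <= length / 2 and abs(local_y) <= width / 2 and abs(dz) <= height / 2


car = ((10.0, 2.0, 0.8), (4.5, 1.8, 1.6), math.pi / 2)
print(len(cuboid_corners(*car)), point_in_cuboid((10.0, 3.5, 0.8), *car))  # 8 True
```

Counting the LiDAR points that fall inside each box is a cheap way to flag cuboids that are misplaced or badly sized.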

Video annotation

We transform your videos into usable data for AI thanks to expert, accurate annotation adapted to your use cases.

Object Tracking

Object tracking consists of following one or more objects of interest frame by frame through a video sequence in order to model their trajectory over time.

Selection of objects to follow (car, person, animal, product,...)

Manual or semi-automatic annotation of the position frame by frame (bounding box, polygon,...)

Consistent assignment of a unique identifier to each tracked object

Adjustment and interpolation of missing frames if necessary

Autonomous driving — Pedestrian and vehicle tracking in an urban environment

Retail — Analysis of the customer journey in stores to study buying behaviors

Sport — Player tracking to model performance or produce real-time statistics

Action Recognition

Annotate video clips containing specific gestures or behaviors (e.g., running, falling, lifting an object, ...) in order to train models that automatically recognize actions in a video.

Definition of the catalog of actions to be detected (exhaustive list, by domain)

Detecting and selecting video segments where these actions occur

Temporal annotation of each action's start and end, with the associated label

Structuring and export in a compatible format (e.g., JSON, CSV, frame range + label)

Sport — Recognition of technical gestures in training videos (e.g., dribbling, jumping, passing)

Security — Detection of suspicious actions (e.g., fights, intrusions, abandoned objects)

Health — Automatic detection of falls or unusual movements in a nursing home
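
Actions are often annotated in seconds but exported as frame ranges. The sketch below converts a labelled `[start, end)` interval into the inclusive range of frames it overlaps, assuming a constant frame rate where frame `i` covers `[i/fps, (i+1)/fps)`, and writes the result as CSV. The `ActionSegment` class, the column names and the 6-decimal rounding guard are illustrative choices, not a fixed standard.

```python
import csv
import io
import math
from dataclasses import dataclass


@dataclass
class ActionSegment:
    label: str
    start_s: float  # start time in seconds
    end_s: float    # end time in seconds (exclusive)


def to_frame_range(seg, fps):
    """Inclusive (first, last) indices of the frames overlapping [start_s, end_s).

    Products are rounded to 6 decimals first so float noise (e.g. 0.1 * 30) does not shift a frame.
    """
    if seg.end_s <= seg.start_s:
        raise ValueError(f"segment {seg.label!r} ends before it starts")
    first = math.floor(round(seg.start_s * fps, 6))
    last = math.ceil(round(seg.end_s * fps, 6)) - 1
    return first, last


def segments_to_csv(segments, fps):
    """Export one row per action: label, first frame, last frame."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["label", "start_frame", "end_frame"])
    for seg in segments:
        writer.writerow([seg.label, *to_frame_range(seg, fps)])
    return buf.getvalue()


print(segments_to_csv([ActionSegment("fall", 2.0, 3.5)], fps=25), end="")
# label,start_frame,end_frame
# fall,50,87
```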

Event Detection

Annotate key or unusual events occurring in a video, with a marked temporal dimension (start/end), without necessarily involving continuous action.

Identification of the types of events to be annotated (e.g., collision, door opening, alarm triggered, etc.)

Temporal annotation of each event (timestamp or frame range) with the corresponding label

Verification and validation of occurrences to avoid false positives

Export of annotations (e.g., CSV or JSON, with timestamp + event type)

E-learning — Automatic marking of key educational moments (e.g., speaking, demonstration, moment of confusion)

Industry — Identification of production incidents (machine jamming, falling object, line stop)

Security — Detection of intrusions, abnormal behaviors or unauthorized movements

Temporal classification

Assign global or contextual labels to continuous sequences of a video by segmenting them into coherent periods (e.g., calm/activity/alert).

Definition of the temporal categories to be annotated (states, situations, activity levels,...)

Annotating time ranges with a single label per segment

Review and check the consistency between the transitions

Export annotated segments with start/end + associated class (formats: JSON, CSV, XML,...)

Behavioral studies — Identifying the phases: sustained attention/distraction/fatigue

Traffic — Sequence classification: fluid/dense/blocked

Monitoring — Segmentation of periods: active/inactive/system error
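
Checking "the consistency between the transitions" can be partly automated. The sketch below assumes each segment is a `(start, end, label)` tuple in seconds and that the segments should tile the whole video exactly once; it reports gaps, overlaps, empty segments and transitions where the label does not actually change. The function name, tolerance and message wording are hypothetical.

```python
def check_timeline(segments, duration, tol=1e-6):
    """Return the problems found in (start, end, label) segments meant to cover [0, duration].

    Flags empty/reversed segments, gaps, overlaps, transitions that keep the same label,
    and coverage that stops short of or runs past the end of the video.
    """
    problems = []
    cursor, prev_label = 0.0, None
    for start, end, label in sorted(segments, key=lambda seg: seg[0]):
        if end <= start:
            problems.append(f"empty or reversed segment {label!r} [{start}, {end}]")
        if start > cursor + tol:
            problems.append(f"gap between {cursor} and {start}")
        elif start < cursor - tol:
            problems.append(f"overlap at {start} ({prev_label!r} / {label!r})")
        elif label == prev_label:
            problems.append(f"redundant transition at {start}: {label!r} follows itself")
        cursor, prev_label = max(cursor, end), label
    if cursor < duration - tol:
        problems.append(f"gap between {cursor} and {duration}")
    elif cursor > duration + tol:
        problems.append(f"segments run past the end of the video ({cursor} > {duration})")
    return problems


timeline = [(0, 40, "fluid"), (40, 95, "dense"), (90, 120, "blocked")]
print(check_timeline(timeline, duration=120))
# ["overlap at 90 ('dense' / 'blocked')"]
```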

Pose Estimation

Annotate the body positions (keypoints) frame by frame in a video sequence, in order to model the movements of one or more individuals over time.

Definition of the keypoint skeleton (e.g., 17 points: head, shoulders, elbows, knees, ...)

Annotation of key points on each frame or by keyframes with interpolation

Manual review and correction in case of occlusion or ambiguity

Export in specialized formats (COCO keypoints, structured JSON, CSV per frame)

Sport — Study of technical movements (throwing, jumping, striking, ...) in training videos

Surveillance — Detection of suspicious postures or motor anomalies

Health/Rehabilitation — Analysis of posture and joint amplitudes
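
Once keypoints are annotated frame by frame, joint amplitudes can be derived from them. The sketch below lists the 17 keypoints of the COCO person skeleton (one common choice for a 17-point skeleton), computes the angle at a joint from three keypoints, and reports an elbow's range of motion over a sequence. It assumes 2D image coordinates; the helper names and the per-frame dictionary layout are hypothetical.

```python
import math

# The 17 keypoints of the COCO person skeleton, in COCO order
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("coincident keypoints: angle undefined")
    cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))  # clamp float noise


def elbow_amplitude(frames, side="left"):
    """Range of motion (max - min elbow angle) over per-frame {keypoint name: (x, y)} dicts."""
    angles = [
        joint_angle(f[f"{side}_shoulder"], f[f"{side}_elbow"], f[f"{side}_wrist"])
        for f in frames
    ]
    return max(angles) - min(angles)


frames = [
    {"left_shoulder": (0, 0), "left_elbow": (0, 10), "left_wrist": (0, 20)},   # straight arm: 180 degrees
    {"left_shoulder": (0, 0), "left_elbow": (0, 10), "left_wrist": (10, 10)},  # bent arm: 90 degrees
]
print(round(elbow_amplitude(frames), 1))  # 90.0
```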

Interpolation

Automatically generate the missing annotations between key frames (keyframes) in a video. This technique speeds up manual annotation while maintaining sufficient precision for training AI models, and it applies to various types of annotation: bounding boxes, polygons, keypoints, etc.

Manual annotation of objects or points on key frames (every X frames)

Activation of automatic interpolation in the annotation tool (CVAT, Label Studio, Encord,...)

Verification of the interpolations generated: trajectories, shapes, coherence

Manual adjustment of frames where interpolation is incorrect

Logistics robotics — Fluid animation of moving objects between two positions

Onboard video — Seamless tracking of vehicles or pedestrians without annotating each frame

Multimedia production — Accelerated annotation of long sequences for segmentation or tracking
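
The simplest form of keyframe interpolation moves each box coordinate linearly between two annotated keyframes. The sketch below assumes boxes stored as `(x_min, y_min, x_max, y_max)` and constant motion between keyframes; annotation tools may apply their own schemes, and frames where the object accelerates or turns are exactly those that need the manual adjustment described above. The function name is hypothetical.

```python
def interpolate_boxes(keyframes):
    """Linearly interpolate boxes between annotated keyframes.

    keyframes maps a frame index to a box (x_min, y_min, x_max, y_max) drawn by an annotator.
    Returns a box for every frame from the first to the last keyframe.
    """
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    boxes = {}
    for f0, f1 in zip(frames, frames[1:]):
        b0, b1 = keyframes[f0], keyframes[f1]
        for f in range(f0, f1):
            t = (f - f0) / (f1 - f0)  # 0 at f0, approaching 1 at f1
            boxes[f] = tuple(p + t * (q - p) for p, q in zip(b0, b1))
    boxes[frames[-1]] = tuple(keyframes[frames[-1]])
    return boxes


tracked = interpolate_boxes({0: (0, 0, 10, 10), 10: (10, 0, 20, 10)})
print(tracked[5])  # (5.0, 0.0, 15.0, 10.0)
```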

3D point cloud annotation

We structure your point clouds into usable 3D data thanks to expert annotation adapted to your AI models.

Point labeling

Assign each point of a 3D point cloud a specific class (e.g., ground, vehicle, pedestrian, vegetation, ...). This method is used to train 3D semantic segmentation models for robotics, autonomous driving or cartography.

Loading the raw point cloud (LiDAR data, photogrammetry, etc.)

Selection of classes to apply (e.g., road, sidewalk, building, tree, car, ...)

Manual or assisted annotation of each point or groups of points (via 3D selection, brushes, volumes)

Export annotated data in a compatible format (e.g., .las, .pcd, .json)

Autonomous driving — Precise segmentation of road elements in urban or highway scenes

HD mapping — Fine point classification to generate structured 3D maps

Industrial robotics — Identification of objects or obstacles in a 3D environment for autonomous navigation
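
Per-point labels can be delivered in an ASCII PCD file by adding an extra integer field next to the coordinates. The sketch below writes the Point Cloud Library's v0.7 header for an unorganised cloud; the field name `label` and the mapping from class names to integers (their index in `class_names`) are project conventions assumed here, not something the format mandates. LAS files store classification codes in their own dedicated field and are not covered.

```python
def to_ascii_pcd(points, labels, class_names):
    """Serialise labelled points as an ASCII PCD (v0.7) file with an extra integer `label` field.

    points: list of (x, y, z); labels: one class name per point; class_names: the label
    vocabulary, whose list index becomes the stored integer (list.index raises ValueError
    for an unknown class).
    """
    if len(points) != len(labels):
        raise ValueError("every point needs exactly one label")
    ids = [class_names.index(name) for name in labels]
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z label",
        "SIZE 4 4 4 4",
        "TYPE F F F U",
        "COUNT 1 1 1 1",
        f"WIDTH {len(points)}",
        "HEIGHT 1",  # unorganised cloud: a single row of points
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {len(points)}",
        "DATA ascii",
    ]
    body = [f"{x} {y} {z} {i}" for (x, y, z), i in zip(points, ids)]
    return "\n".join(header + body) + "\n"


classes = ["ground", "vehicle", "pedestrian", "vegetation"]
pcd = to_ascii_pcd([(0.0, 0.0, -1.7), (5.2, 1.1, 0.3)], ["ground", "vehicle"], classes)
print(pcd.splitlines()[-1])  # 5.2 1.1 0.3 1
```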

Meshes

Label 3D surfaces made of connected triangles or polygons, often derived from LiDAR or photogrammetric scans. Because it captures the actual topology of objects, this allows more precise segmentation of shapes and volumes than point annotations alone.

Identifying objects to be annotated

Precise framing of objects

Category labeling

Validating annotations

Medicine — Annotation of anatomical surfaces (bones, organs) on 3D models from MRI or CT scans

AR/VR/3D Modeling — Labeling 3D object components for physical interactions or simulations

Architecture/Construction — Identification of materials or structures on 3D building models

Point clouds

Point cloud annotation identifies, segments or classifies objects in a three-dimensional space captured via LiDAR or photogrammetry. It can take the form of 3D cuboids, segmented areas or point labeling, and is used to train perception models for real-world environments.

Loading raw data (e.g., .las, .pcd, .bin, .json) into a dedicated 3D tool

Point cloud visualization with spatial navigation tools (rotation, zoom, selection)

Annotation by volume (cuboid), free selection (lasso, brush), or point by point

Assigning classes to each object or segment (vehicle, pedestrian, tree, facade, etc.)

Autonomous vehicles — 3D detection of objects and areas in complex scenes

Robotics — Identifying obstacles, target objects or navigation areas in a 3D space

Smart city/mapping — Structuring urban elements based on aerial or mobile scans

Flat surfaces

In data labeling, a flat surface is an area of the point cloud where the points are regularly distributed and lie on the same geometric plane.

Loading the 3D point cloud into a compatible visualization tool

Manual annotation by selecting flat areas

Assignment of a label to each detected plane

Export of surfaces with metadata (plane ID, label, orientation, coordinates)

Indoor scan — Automatic identification of floors, walls and ceilings for BIM modeling or augmented reality

3D mapping — Detection of facades, roofs or other architectural elements in urban scenes

Mobile robotics — Identification of navigable surfaces (flat ground) for trajectory planning
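
Flat areas are selected manually in the workflow above, but a candidate plane and its orientation (the normal vector) can be proposed automatically. The sketch below swaps in RANSAC plane fitting as an illustration of such assistance: it repeatedly fits a plane through three random points and keeps the plane with the most points within a distance threshold. The threshold, iteration count and helper names are assumptions to tune per scanner; libraries such as Open3D offer comparable plane segmentation.

```python
import math
import random


def _sub(p, q):
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _dot(p, q):
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]


def _cross(p, q):
    return (p[1] * q[2] - p[2] * q[1], p[2] * q[0] - p[0] * q[2], p[0] * q[1] - p[1] * q[0])


def plane_through(p1, p2, p3):
    """Unit normal n and offset d with n . p + d = 0, or None if the points are collinear."""
    n = _cross(_sub(p2, p1), _sub(p3, p1))
    norm = math.sqrt(_dot(n, n))
    if norm < 1e-12:
        return None
    n = (n[0] / norm, n[1] / norm, n[2] / norm)
    return n, -_dot(n, p1)


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """Fit the plane supported by the most points; returns ((normal, d), inlier indices)."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_through(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [i for i, p in enumerate(points) if abs(_dot(n, p) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


floor = [(x * 0.5, y * 0.5, 0.0) for x in range(5) for y in range(5)]
clutter = [(1.0, 1.0, 0.8), (0.2, 1.7, 1.5), (1.9, 0.4, 0.6)]
(normal, d), inliers = ransac_plane(floor + clutter)
print(len(inliers), [round(abs(c), 3) for c in normal])  # 25 [0.0, 0.0, 1.0]
```

The inlier indices give the annotator a pre-selected flat area to confirm or correct, and the normal supplies the orientation metadata for export.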

3D objects

Identify and delineate complete entities in a point cloud by associating them with a label (e.g., car, pedestrian, tree, etc.). This annotation can be done with 3D cuboids, manual selections or assisted segmentation algorithms.

Point cloud loading (terrestrial, mobile or aerial LiDAR)

Annotate each detected object with an identifier and a class

Checking the contours, orientation, and completeness of objects

Export of annotations in a compatible format

Environment — Tree tracking and counting in aerial LiDAR surveys

Industry — Identification of objects or equipment in 3D scans of factories or warehouses

Autonomous driving — 3D detection and tracking of vehicles, pedestrians, cyclists in the road environment

3D Object Tracking

Identify the same object in a point cloud across several successive frames by giving it a unique, persistent identifier. This annotation is used to train models capable of following moving objects in 3D space.

Initial annotation of the objects in each frame (e.g. via cuboids or segmentation)

Assigning a unique ID per object to link it across frames

Manual or semi-automatic tracking of the position and dimensions of the object over time

Data export with time identifiers (frame, object ID, 3D position, class)

Autonomous vehicles — Continuous tracking of pedestrians, cars and two-wheelers in a LiDAR stream

Logistics robotics — Tracking packages or objects handled in a warehouse

Security — 3D tracking of people or machines in monitored environments

Medical annotations

We transform your medical images into reliable data thanks to expert, rigorous annotation that meets clinical requirements.

Bounding Boxes

Delineate areas of interest (e.g., anomalies, organs, medical devices) on 2D images from examinations such as X-rays, MRIs or ultrasounds. This fast, structured method is used to train automatic detection models for clinical settings.

Loading medical images (DICOM format, PNG, JPEG, etc.) into a compatible annotation tool

Selection of classes to be annotated (lesion, tumor, implant, bone, etc.)

Manual annotation of regions of interest using rectangles (bounding boxes)

Export annotations in standard format (e.g., COCO, Pascal VOC, YOLO)

Radiology — Detection of fractures or implants on x-rays

Pulmonology — Identification of nodules or opacities on chest x-rays

Neurology — Annotation of suspicious masses on brain MRI sections

Polygons

Precisely delineate the contours of organs, lesions or implants on 2D medical images. Unlike bounding boxes, polygons offer better precision for irregular or complex structures, which is essential for fine segmentation in medical imaging.

Importing medical images (x-ray, MRI, ultrasound, etc.)

Definition of anatomical or pathological classes to be segmented

Manual annotation of contours using point-to-point polygons (or free drawing tools)

Export in mask format (PNG), COCO segmentation, or custom formats for segmentation

Oncology — Segmentation of lung nodules or masses on CT scans

Orthopaedics — Contouring of joints or bone areas on x-rays

Neurology — Precise delineation of tumors or brain areas on MRI

Segmentation into masks

Assign a label to each pixel of a medical image to precisely delineate an anatomical structure or anomaly. This is used to train semantic or instance segmentation models, especially for tasks that require a detailed understanding of shapes and volumes.

Importing medical images (DICOM, PNG, NIfTI, etc.) into a segmentation tool

Definition of the classes to be segmented (organ, lesion, prosthesis,...)

Manual or semi-automatic annotation pixel by pixel or by zone (brush, active contour, AI assistance, etc.)

Export as binary or multi-channel masks (PNG, NIfTI, COCO RLE, etc.)

Neuro-imaging — Segmentation of ventricles, tumors or functional regions of the brain

Oncology — Fine delineation of tumors for radiotherapy or progression monitoring

Musculoskeletal imaging — Segmentation of bone or joint structures on MRI or CT
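
COCO RLE, one of the export options mentioned above, stores a binary mask as alternating run lengths of 0s and 1s. The pixels are read in column-major order (down each column, then the next), and the first run always counts 0s, so it may be zero. The sketch below produces the uncompressed form `{"size": [height, width], "counts": [...]}` and decodes it again to check the round trip; pycocotools can convert this form to its compressed string encoding (for example via `pycocotools.mask.frPyObjects`). A multi-class mask would be encoded as one RLE per class or instance. Function names are illustrative.

```python
def mask_to_rle(mask):
    """Uncompressed COCO-style RLE of a binary mask given as rows of 0/1 values."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    counts, current, run = [], 0, 0
    for x in range(width):          # column-major: walk down each column in turn
        for y in range(height):
            value = 1 if mask[y][x] else 0
            if value != current:
                counts.append(run)  # close the current run (may be 0 for a leading 1)
                current, run = value, 0
            run += 1
    counts.append(run)
    return {"size": [height, width], "counts": counts}


def rle_to_mask(rle):
    """Inverse of mask_to_rle, used here to check the encoding round-trips."""
    height, width = rle["size"]
    mask = [[0] * width for _ in range(height)]
    index, value = 0, 0
    for run in rle["counts"]:
        for i in range(index, index + run):
            x, y = divmod(i, height)  # column-major: i = x * height + y
            mask[y][x] = value
        index, value = index + run, 1 - value
    return mask


lesion = [[0, 1, 1],
          [0, 1, 0]]
rle = mask_to_rle(lesion)
print(rle)  # {'size': [2, 3], 'counts': [2, 3, 1]}
assert rle_to_mask(rle) == lesion
```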

3D areas (Volume Annotation)

Delineate regions of interest within a medical volume (MRI, CT, etc.) by annotating voxel by voxel or by interpolating segmentations across slices.

Loading the 3D volume (DICOM, NIfTI formats, etc.) into medical visualization software

Selecting the structure to be annotated (e.g. tumor, organ, cavity)

Manual or semi-automated annotation on the various sections (axial, coronal, sagittal)

Export the segmented 3D mask in a compatible format (NIfTI, MHD, volumetric PNGs, ...)

Radiotherapy — Outline of organs at risk and target volumes for dose calculation

Neurosurgery — Volume delineation of brain tumors for operative planning

Clinical research — Annotation of whole organs (liver, kidneys, heart,...) for training 3D segmentation models
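
A volume annotation becomes clinically meaningful once it is converted to physical units, and two annotators' volumes can be compared with an overlap score. The sketch below computes a structure's volume in millilitres from a binary voxel mask and the voxel spacing, and the Dice coefficient between two masks as one possible agreement check. It assumes the mask is indexed `[slice][row][column]` and that the spacing, in mm and in the same order, comes from the image header; the function names are hypothetical.

```python
def volume_ml(mask, spacing_mm):
    """Volume of a binary voxel mask in millilitres.

    mask is indexed [slice][row][column]; spacing_mm = (slice, row, column) spacing in mm,
    taken from the image header. 1 mL = 1000 mm^3.
    """
    voxels = sum(1 for plane in mask for row in plane for v in row if v)
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    return voxels * voxel_mm3 / 1000.0


def dice(mask_a, mask_b):
    """Dice overlap 2|A and B| / (|A| + |B|) between two same-shaped voxel masks."""
    a = [bool(v) for plane in mask_a for row in plane for v in row]
    b = [bool(v) for plane in mask_b for row in plane for v in row]
    both = sum(x and y for x, y in zip(a, b))
    total = sum(a) + sum(b)
    return 1.0 if total == 0 else 2 * both / total


organ = [[[1, 1, 1, 1, 1], [1, 1, 1, 1, 1]]]   # first annotator: 10 voxels
review = [[[1, 1, 1, 1, 1], [1, 1, 1, 1, 0]]]  # second annotator: 9 voxels
print(volume_ml(organ, (2.0, 0.5, 0.5)))  # 0.005
print(round(dice(organ, review), 3))      # 0.947
```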

Contours and curves

Precisely trace the boundaries of anatomical or pathological structures on 2D medical images by manually or semi-automatically following the natural lines of an organ, a lesion or an implant.

Importing medical images (MRI, x-rays, ultrasound,...)

Using a freehand or curve drawing tool in an annotation platform

Closing the curve and validating the precision of the tracing

Export in vector or rasterized format (SVG, JSON, PNG mask,...)

Neurology — Annotation of the boundaries of functional brain areas on anatomical MRI

Cardiology — Delimitation of the myocardial wall or heart chambers on functional MRI

Orthopaedics — Tracing joint lines or cracks on x-rays

Landmarks

Place precise points on anatomical landmarks to analyze the structure, symmetry or alignment of a given area. This is used for tasks such as morphometry and image alignment, or as a basis for other types of annotation (segmentation, measurements, monitoring).

Loading the medical image (MRI, CT, X-ray, etc.) into an annotation tool

Manual positioning of points on specific structures (e.g., apex of the heart, femoral condyle, commissures)

Checking the consistency of positions and distances

Export of coordinates (CSV, JSON, XML, or proprietary format depending on the tool)

Dentistry — Positioning of cranial landmarks on cephalograms for orthodontic analysis

Orthopaedics — Annotation of alignment points on x-rays for planning implants or prostheses

Neurosurgery — Marking of anatomical landmarks for the alignment of preoperative MRIs
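
Checking "the consistency of positions and distances" requires converting pixel coordinates to millimetres. The sketch below assumes 2D landmarks given as `(column, row)` pixel coordinates and a spacing pair in the DICOM `PixelSpacing` order (row spacing, then column spacing, in mm). It computes physical distances and lists the landmarks two annotators placed more than a tolerance apart. The landmark names and the tolerance are illustrative values, not clinical thresholds.

```python
import math


def landmark_distance_mm(p, q, pixel_spacing):
    """Physical distance between two landmarks given as (column, row) pixel coordinates.

    pixel_spacing follows the DICOM PixelSpacing order: (row spacing, column spacing) in mm,
    i.e. the vertical distance between rows first, then the horizontal distance between columns.
    """
    dx = (p[0] - q[0]) * pixel_spacing[1]
    dy = (p[1] - q[1]) * pixel_spacing[0]
    return math.hypot(dx, dy)


def disagreements(annotator_a, annotator_b, pixel_spacing, tol_mm=1.0):
    """Names of landmarks that two annotators placed more than tol_mm apart."""
    return sorted(
        name for name in annotator_a.keys() & annotator_b.keys()
        if landmark_distance_mm(annotator_a[name], annotator_b[name], pixel_spacing) > tol_mm
    )


a = {"sella": (210, 180), "nasion": (420, 150)}
b = {"sella": (212, 180), "nasion": (420, 158)}
print(disagreements(a, b, pixel_spacing=(0.1, 0.1), tol_mm=0.5))  # ['nasion']
```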

Use cases

Our expertise covers a wide range of AI use cases, regardless of the domain or the complexity of the data.

Why choose Innovatiana?

We mobilize a flexible and experienced team of experts who master image and video annotation through manual, automatic and hybrid approaches. We produce visual datasets adapted to all Computer Vision use cases.

Our method

A team of professional Data Labelers and AI Trainers, led by experts, creates and maintains quality datasets for your AI projects (custom datasets to train, test and validate your Machine Learning, Deep Learning or NLP models).

We provide tailor-made support that takes your constraints and deadlines into account. We advise you on your certification process and infrastructure, the number of professionals required for your needs, and the most suitable types of annotation.

Within 48 hours, we assess your needs and run a test if necessary, in order to offer you a contract adapted to your challenges. No lock-in: no monthly subscription, no commitment. We charge per project!

We mobilize a team of Data Labelers or AI Trainers. This team is managed by one of our Data Labeling Managers: your privileged contact.


🤝 Ethics is the cornerstone of our values

Many data labeling companies operate with questionable practices in low-income countries. We offer an ethical and impactful alternative.

Stable and fair jobs, with total transparency on where the data comes from

A team of trained Data Labelers who are fairly paid and supported in their professional growth

Flexible pricing by dataset or project, with no hidden costs

Economic development in Madagascar (and other countries) through training and local investment

Maximum protection of your sensitive data according to the best standards

Acceleration of global ethical and responsible Artificial Intelligence

🔍 AI starts with data

Before training your AI, the real work lies in designing the right dataset. A robust POC aligns quality data, an adapted model architecture, and optimized computing resources.

Feed your AI models with high-quality, expertly crafted training data!
