How Drone Data Annotation Boosts AI Accuracy by 85%

💡 How Top Companies Use Drone Data Annotation to Improve Accuracy by 85%

What happens when you combine high-resolution aerial imagery with precise data labeling? Industries discover new ways to extract actionable insights that were previously impossible to obtain. Modern drones capture real-time footage that powers AI models, automating complex analysis tasks that once demanded extensive manual work. Machine learning models, particularly neural networks, now automate the analysis and processing of this complex data, often outperforming humans at detecting and labeling specific objects.

Drone data annotation services now reach well beyond drone research and development into numerous industries. Urban planners, agricultural specialists, environmental researchers, and emergency responders all rely on aerial imagery that delivers critical information in remarkable detail. Consider the volume: a drone recording video at 30 frames per second produces 108,000 frames every hour, which makes real-time annotation essential rather than optional for time-sensitive operations such as search-and-rescue missions, wildfire monitoring, and security surveillance.

Quality drone data serves purposes far beyond simple image collection. Proper annotation creates labeled datasets that teach AI models to recognize objects and understand environments, allowing drones to interpret and respond to various objects, actions, and scenarios accurately. Rich context in these annotations provides relevant situational information, enhancing model accuracy and decision-making in complex environments. Field workers experience less stress and greater efficiency when using high-resolution drone imagery for mapping and monitoring tasks.

👉 This article examines how industry leaders construct annotation pipelines, deploy innovative applications, address technical challenges, and follow proven practices to achieve substantial accuracy gains in their drone operations.

Introduction to Drone Technology

Drone technology has rapidly transformed the landscape of data collection and analysis across a wide range of industries. Unmanned aerial vehicles (UAVs) now play a key role in environmental monitoring, disaster management, and precision agriculture by delivering high-resolution aerial imagery and comprehensive sensor data from dynamic environments. The ability of drones to access hard-to-reach areas and capture detailed information in real time has unlocked new opportunities for organizations seeking valuable insights into their operations and surroundings.

However, the true value of drone-collected data is realized only when it is accurately interpreted and analyzed. This is where data annotation becomes indispensable. By applying annotation techniques such as bounding boxes for object detection and pixel-level masks for semantic segmentation, organizations can transform raw aerial imagery and sensor data into structured training data. This annotated data is essential for training machine learning models and computer vision algorithms, enabling them to recognize objects, classify land features, and detect changes within complex environments.

Data annotation tools streamline this process by providing intuitive interfaces and automation features that facilitate precise labeling of large datasets. These tools support a variety of annotation techniques, ensuring that the resulting training data meets the rigorous standards required for effective model training. As a result, industries ranging from agriculture to emergency response can leverage drone technology and annotated data to drive smarter decision-making, improve operational efficiency, and respond proactively to emerging challenges.

How Top Companies Build Real-Time Annotation Pipelines

Companies today face a critical challenge: processing the massive data streams from modern drone systems in real time. Sophisticated annotation pipelines address this challenge and represent a major shift in how aerial data is captured, processed, and analyzed. Annotating imagery captured at different altitudes matters as well: because an object's apparent size and detail change with flight height, covering a range of altitudes diversifies the training data and improves object recognition across flight levels.

To keep up with growing annotation demands, companies are adopting scalable pipelines that expand annotation capacity, support continuous updates, and keep processing fast as data volume and complexity increase. The following components are typical.

Drone-camera integration and data capture

What makes a drone system, often called an unmanned aerial vehicle (UAV), truly powerful? Much of its strength lies in sensor fusion. Top-tier drones combine high-resolution RGB cameras, thermal cameras, LiDAR scanners, and multispectral sensors, and this multi-modal approach gives them rich perception capabilities while operating in three-dimensional space. The DJI Matrice 300 RTK, for example, supports software development kits that provide direct access to live streams, making immediate data processing possible.
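Fusing these streams starts with matching readings captured at nearly the same moment, since each sensor samples at its own rate. The sketch below pairs each RGB frame with the nearest thermal frame in time; the `SensorReading` type, the shared clock, and the 50 ms tolerance are illustrative assumptions, not part of any DJI SDK.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SensorReading:
    timestamp: float  # seconds on a shared clock (assumed already synchronized)
    source: str       # e.g. "rgb" or "thermal"
    payload: str      # placeholder for the actual image or point-cloud data


def align(rgb: List[SensorReading], thermal: List[SensorReading],
          tolerance_s: float = 0.05) -> List[Tuple[SensorReading, Optional[SensorReading]]]:
    """Pair each RGB frame with the thermal frame closest in time.

    The partner is None when no thermal frame lies within `tolerance_s` seconds.
    """
    thermal = sorted(thermal, key=lambda r: r.timestamp)
    times = [r.timestamp for r in thermal]
    pairs = []
    for frame in rgb:
        i = bisect_left(times, frame.timestamp)
        # The nearest neighbour sits just before or at/after the insertion point.
        candidates = [thermal[j] for j in (i - 1, i) if 0 <= j < len(thermal)]
        best = min(candidates, key=lambda r: abs(r.timestamp - frame.timestamp), default=None)
        if best is not None and abs(best.timestamp - frame.timestamp) > tolerance_s:
            best = None
        pairs.append((frame, best))
    return pairs
```

Frames left without a partner can be annotated from the RGB channel alone or held back until the thermal stream catches up.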

Onboard preprocessing to reduce noise

Smart companies don't wait until data reaches the ground to start processing. They implement preprocessing stages directly on the drone itself. This initial filtering eliminates irrelevant or redundant data, cutting the overall processing load significantly. Onboard systems clean and format raw sensor inputs, helping ensure that what gets transmitted is accurate and relevant. Lightweight models like YOLO-Nano or MobileNet SSD perform preliminary detections, creating a first-pass filter that identifies the most important frames.
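A common first-pass filter of this kind drops motion-blurred frames before a detector ever sees them. This minimal sketch scores sharpness as the variance of a 4-neighbour Laplacian (low variance suggests blur); the frame representation (lists of grayscale rows) and the threshold of 100 are assumptions for illustration, and an onboard build would use an optimized image library rather than pure Python.

```python
from statistics import pvariance
from typing import List

Frame = List[List[int]]  # grayscale pixel rows (toy representation)


def laplacian_variance(frame: Frame) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels; low values suggest blur."""
    h, w = len(frame), len(frame[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (frame[y - 1][x] + frame[y + 1][x]
                   + frame[y][x - 1] + frame[y][x + 1] - 4 * frame[y][x])
            responses.append(lap)
    return float(pvariance(responses)) if responses else 0.0


def keep_sharp_frames(frames: List[Frame], threshold: float = 100.0) -> List[int]:
    """Indices of frames whose sharpness score exceeds the (hypothetical) threshold."""
    return [i for i, f in enumerate(frames) if laplacian_variance(f) > threshold]
```

A uniform frame scores 0 and is dropped, while a frame with strong edges scores high and is kept for detection.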

Edge and cloud-based inference models

The best approach combines edge and cloud processing rather than choosing one over the other. Edge computing enables drones to analyze data locally, sometimes within milliseconds, boosting decision-making speed by up to 65%. Cloud servers handle the heavier computational work and model updates. This integrated framework turns UAVs into edge nodes for data collection that stay connected to ground-based cloud servers over wireless links. Advanced systems deploy AI inference engines that detect objects, identify anomalies, and generate geotagged alerts without overloading the drone's limited computing resources. All of these models learn from annotated images and video, which is what allows them to support detection, navigation, and security functions.
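One way to divide the work is to let confident onboard detections trigger alerts immediately and send only ambiguous frames to the cloud for heavier inference. The routing rule below is a hypothetical sketch; the `Detection` type and both thresholds are assumptions, not values from any particular system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    confidence: float  # score from the lightweight onboard model


def route_frame(detections: List[Detection], alert_threshold: float = 0.8,
                upload_threshold: float = 0.3) -> str:
    """Decide what happens to a frame after onboard inference.

    "edge_alert": at least one confident detection, so raise a geotagged alert locally.
    "cloud":      something was detected, but only with middling confidence.
    "discard":    nothing worth spending bandwidth on.
    """
    if not detections:
        return "discard"
    best = max(d.confidence for d in detections)
    if best >= alert_threshold:
        return "edge_alert"
    if best >= upload_threshold:
        return "cloud"
    return "discard"
```

Frames that trigger edge alerts could still be uploaded later as training data; this sketch only decides what needs to leave the drone right away.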

Smart sampling and frame buffering

Here's a key insight: not every frame needs annotation, especially in high-frame-rate drone footage. Smart sampling techniques examine one frame every few seconds unless motion or anomalies appear. Frame buffer methods have demonstrated a 14.29% improvement over baseline approaches when handling the complex scene textures common in UAV imagery. Event-based triggers, such as a change in object count or a detected anomaly, dynamically decide which frames get annotation priority. This selective strategy preserves battery power while maintaining annotation quality for the most critical data points.
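The periodic-plus-event rule can be sketched in a few lines. This version assumes the onboard detector already reports an object count per frame; the function name, frame rate, and two-second interval are hypothetical.

```python
from typing import List


def select_frames(object_counts: List[int], fps: float = 30.0,
                  interval_s: float = 2.0) -> List[int]:
    """Pick frame indices for annotation.

    A frame is selected when either
      * the periodic timer fires (one frame every `interval_s` seconds), or
      * the onboard object count differs from the previous frame (event trigger).
    """
    step = max(1, round(fps * interval_s))
    selected = []
    for i, count in enumerate(object_counts):
        periodic = i % step == 0
        event = i > 0 and count != object_counts[i - 1]
        if periodic or event:
            selected.append(i)
    return selected
```

At 1 frame per second with a 5-second interval, a clip whose object count jumps at frame 3 yields frames 0, 3, 5, and 10 for annotation.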

Tools and Techniques for Drone Data Annotation

The effectiveness of drone-based analytics hinges on the quality and consistency of annotated data. To achieve this, organizations rely on a suite of data annotation tools and techniques tailored to the unique challenges of aerial imagery. Common approaches include box annotation for object detection, polygon annotation for outlining irregular shapes, and semantic segmentation for pixel-level classification of terrain and features. These annotation techniques enable machine learning models to accurately detect objects, monitor changes, and classify elements within complex aerial datasets.
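Box and polygon annotations differ in how closely they follow an object's outline, which matters for irregular shapes like field boundaries. A minimal sketch with hypothetical `BoxAnnotation` and `PolygonAnnotation` types, computing polygon area with the shoelace formula and deriving the enclosing box:

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class BoxAnnotation:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class PolygonAnnotation:
    label: str
    points: List[Point]  # vertices in order (clockwise or counter-clockwise)

    def area(self) -> float:
        """Shoelace formula; valid for simple (non-self-intersecting) polygons."""
        n = len(self.points)
        s = 0.0
        for i in range(n):
            x1, y1 = self.points[i]
            x2, y2 = self.points[(i + 1) % n]
            s += x1 * y2 - x2 * y1
        return abs(s) / 2.0

    def bounding_box(self) -> BoxAnnotation:
        """Tightest axis-aligned box enclosing the polygon."""
        xs = [p[0] for p in self.points]
        ys = [p[1] for p in self.points]
        return BoxAnnotation(self.label, min(xs), min(ys), max(xs), max(ys))
```

For an L-shaped field with vertices (0,0), (4,0), (4,1), (1,1), (1,3), (0,3), the polygon covers 6 square units while its bounding box covers 12, which is why polygons suit irregular shapes.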

Modern data annotation tools are designed to handle large volumes of collected data efficiently, supporting a wide range of file formats and annotation methods. These platforms often incorporate features for precise labeling, quality control, and seamless integration with machine learning workflows. Annotation services further enhance this process by providing dedicated teams of skilled annotators who ensure data integrity and consistency across diverse datasets. Their domain expertise is especially valuable when dealing with edge cases or specialized environments, such as wildlife monitoring or urban planning.

In addition to manual annotation, advanced tools leverage object tracking and machine learning algorithms to automate repetitive tasks and accelerate the creation of annotated datasets. This combination of human expertise and intelligent automation allows organizations to scale their annotation efforts, maintain high standards of accuracy, and extract actionable insights from aerial data. Whether supporting public safety initiatives, mapping urban growth, or monitoring wildlife populations, these tools and techniques empower organizations to fully harness the potential of drone technology in various industries.

8 Ways Companies Use Drone Data Annotation to Improve Accuracy

Organizations worldwide have discovered that precise visual data labeling unlocks critical insights from aerial imagery. Data annotation tools make it efficient to label images, video, and other data types for AI model development, and the high-quality training datasets they produce are essential for effective model training in aerial and drone applications. Each of the following applications shows how a strategic annotation approach delivers measurable accuracy improvements in a different operational context.

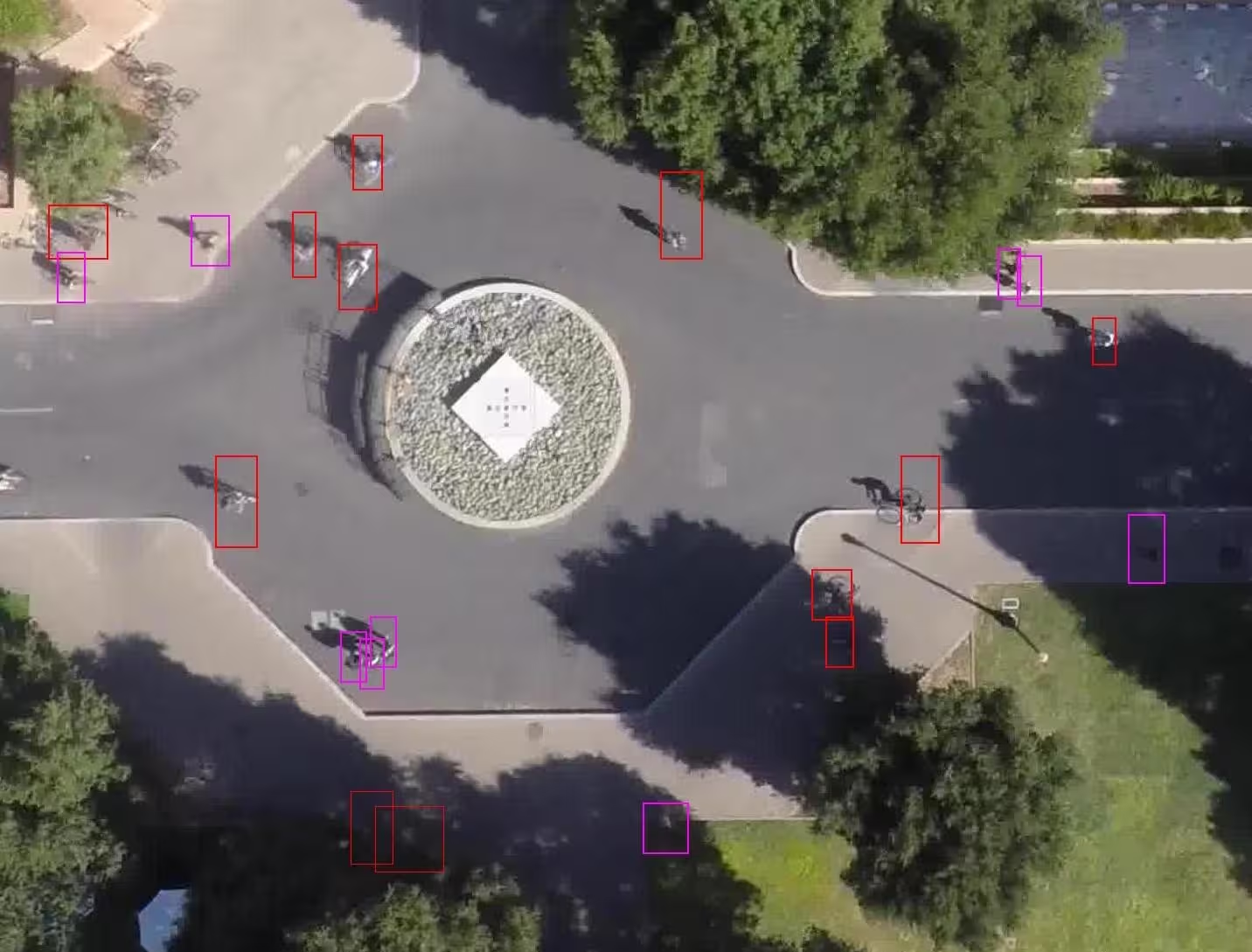

1. Detecting and tracking moving objects in real time

Computer vision models now identify and follow moving objects while adapting to variable lighting conditions and physical obstacles. These models depend on video annotation, in which aerial footage is labeled for object detection and tracking. The VisDrone dataset, for instance, contains 288 video clips with 261,908 frames plus 10,209 static images, and provides training material for object tracking algorithms that help drones detect pedestrians, vehicles, bicycles, and other moving targets.
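Detectors trained on datasets like VisDrone produce boxes frame by frame; tracking then links those boxes into identities over time. The sketch below is a deliberately simple greedy IoU matcher, not SORT or Deep SORT (which add motion prediction and, in Deep SORT's case, appearance features); the class name and the 0.3 IoU threshold are assumptions.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IoUTracker:
    """Greedy IoU matching between consecutive frames (a baseline, not SORT/Deep SORT)."""

    def __init__(self, min_iou: float = 0.3):
        self.min_iou = min_iou
        self.tracks: Dict[int, Box] = {}
        self.next_id = 0

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        """Assign a track ID to each detection; unmatched detections start new tracks."""
        # Score every (track, detection) pair and take the best matches first.
        pairs = sorted(
            ((iou(box, det), tid, di)
             for tid, box in self.tracks.items()
             for di, det in enumerate(detections)),
            reverse=True,
        )
        used_tracks, used_dets, assigned = set(), set(), {}
        for score, tid, di in pairs:
            if score < self.min_iou:
                break
            if tid in used_tracks or di in used_dets:
                continue
            assigned[tid] = detections[di]
            used_tracks.add(tid)
            used_dets.add(di)
        for di, det in enumerate(detections):
            if di not in used_dets:
                assigned[self.next_id] = det
                self.next_id += 1
        self.tracks = assigned  # tracks with no match in this frame are dropped
        return assigned
```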

2. Segmenting terrain for agriculture and land use

Semantic segmentation enables pixel-level precision when identifying different land types. Drone data annotation also supports crop monitoring for precision agriculture, enabling more accurate analysis and management of fields.

High-resolution drone photography allows AI models to distinguish farmland from buildings and natural features, creating valuable datasets for agricultural planning and environmental management. Tailored annotation schemas help detect crop diseases early, supporting both agricultural planning and effective disease management.
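Segmentation quality for land-use classes is commonly reported as intersection over union (IoU) per class. A small sketch, assuming masks stored as lists of per-pixel class IDs (the class numbering, such as 0 for farmland and 1 for buildings, is hypothetical):

```python
from typing import Dict, List

Mask = List[List[int]]  # per-pixel class IDs


def per_class_iou(pred: Mask, truth: Mask, num_classes: int) -> Dict[int, float]:
    """Intersection over union per class for two equally sized label masks.

    Classes absent from both masks are skipped rather than scored.
    """
    inter = [0] * num_classes
    union = [0] * num_classes
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            if p == t:
                inter[p] += 1
                union[p] += 1
            else:
                # A mismatched pixel enlarges the union of both classes involved.
                union[p] += 1
                union[t] += 1
    return {c: inter[c] / union[c] for c in range(num_classes) if union[c] > 0}
```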

3. Monitoring construction progress and safety compliance

Construction teams rely on annotated drone imagery to track project timelines and verify safety standards. Tools like PIX4Dcloud help companies compare volumes over time, analyze excavation profiles, and create customizable reports that improve teamwork and project quality by up to 50%.
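Volume comparisons like these are typically derived from a digital surface model (DSM) reconstructed from the drone images. The function below is a simplified sketch, not PIX4Dcloud's method: it assumes a gridded DSM and a flat base plane, and sums each cell's height above the base times the cell's ground area.

```python
from typing import List


def stockpile_volume(dsm: List[List[float]], base_elevation: float,
                     cell_size_m: float) -> float:
    """Approximate volume (m^3) above a flat base plane from a gridded DSM.

    Each cell contributes (height above base) x (cell area); cells below the
    base contribute nothing in this simplified model.
    """
    cell_area = cell_size_m ** 2
    return sum(max(z - base_elevation, 0.0) * cell_area for row in dsm for z in row)
```

Running it on DSMs from two survey dates and subtracting the results gives the change in volume between flights.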

4. Identifying anomalies in infrastructure inspections

High-precision sensors and non-destructive testing (NDT) probes detect material defects and degradation before they become critical issues. Terrain analysis adds context to the aerial data, improving object detection and safety assessments during infrastructure inspections. Together, these systems identify concrete cracks, spalling, and corrosion in bridges, power lines, and pipelines, making preventive maintenance both practical and cost-effective.

5. Enhancing perimeter security and surveillance

Security teams deploy drones capable of completing perimeter sweeps in under two minutes, covering large areas far more efficiently than ground-based personnel. These systems also cut the time from an alarm trigger to the first video feed to under two minutes, and once drone patrols become active they sometimes prevent intrusions entirely. Drone data annotation can also be used to monitor and analyze traffic patterns, helping improve security and urban management by identifying unusual vehicle flow or congestion near sensitive areas.

6. Mapping disaster zones for emergency response

AI tools like CLARKE process drone footage to evaluate structural damage in minutes rather than days; the technology can assess a neighborhood of 2,000 homes in just seven minutes, dramatically accelerating response times for emergency teams. Accuracy matters as much as speed: minimizing false positives (for example, pixels mistakenly classified as coastline) is crucial for accurate disaster zone mapping and effective emergency response.
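A false positive here is a pixel the model assigns to the target class that the ground truth does not. A minimal sketch, assuming boolean masks of equal size for a single class (for example, coastline), counts errors and derives precision and recall:

```python
from typing import Dict, List

BinaryMask = List[List[bool]]  # True = pixel belongs to the target class


def mask_errors(pred: BinaryMask, truth: BinaryMask) -> Dict[str, float]:
    """Count true positives, false positives, and false negatives for one class."""
    tp = fp = fn = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1  # predicted as the class, but not in the ground truth
            elif t and not p:
                fn += 1  # missed pixel of the class
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "precision": precision, "recall": recall}
```

Fewer false positives raise precision; tracking recall alongside it shows whether that came at the cost of missed pixels.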

7. Tracking wildlife and environmental changes

Researchers deploy drones with thermal cameras to monitor animal populations without disrupting natural behavior patterns. Drone data annotation also helps identify and monitor water bodies, which is essential for tracking environmental changes and understanding habitat dynamics. These systems count animals, observe movement patterns, and track environmental changes with minimal human interference.
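As a crude illustration of automated counting, animals that appear warmer than their surroundings can be counted as connected warm regions in a thermal frame. This is a hypothetical baseline rather than how production wildlife systems work (those typically rely on detectors trained on annotated imagery); the temperature threshold and minimum blob size are assumptions.

```python
from collections import deque
from typing import List


def count_warm_blobs(thermal: List[List[float]], threshold: float,
                     min_pixels: int = 1) -> int:
    """Count 4-connected regions of pixels at or above `threshold`.

    Regions smaller than `min_pixels` are ignored as noise.
    """
    h, w = len(thermal), len(thermal[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if seen[y][x] or thermal[y][x] < threshold:
                continue
            # Breadth-first flood fill of one warm region.
            size = 0
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                size += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx]:
                        if thermal[ny][nx] >= threshold:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if size >= min_pixels:
                count += 1
    return count
```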

8. Supporting military reconnaissance and tactical planning

Military applications utilize drone footage to automatically detect civilian and military personnel in real-time operations. Data collected from other drones can be integrated to improve object detection and situational awareness in military operations. RGB cameras paired with neural networks increase situational awareness and help identify military capabilities to enhance operational success.

Overcoming Challenges in Drone Data Annotation

Technical progress in drone annotation doesn’t come without significant obstacles. New challenges continually arise from evolving operational requirements and rapid technological advancements, requiring constant adaptation. Data processing demands create the biggest challenge when drones capture HD or 4K video at 30+ frames per second. Bandwidth bottlenecks emerge quickly, particularly in rural environments where connectivity remains limited.

Managing high frame rates and data volume

Massive datasets demand intelligent filtering approaches. High-resolution drone footage builds up rapidly; projects routinely involve 100,000+ frames or 55+ hours of video. Successful annotation pipelines use frame skipping, scene change detection, and event-based triggers to isolate only the most relevant frames. This targeted method protects both computational resources and annotation quality.
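Scene change detection can be as simple as comparing intensity histograms. In this sketch, a frame is kept when its histogram differs enough from the last kept frame; the bin count, the 0.5 threshold, and the list-of-rows frame format are assumptions.

```python
from typing import List

Frame = List[List[int]]  # grayscale values 0-255


def histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Normalized intensity histogram of a grayscale frame."""
    counts = [0] * bins
    total = 0
    for row in frame:
        for v in row:
            counts[min(v * bins // 256, bins - 1)] += 1
            total += 1
    return [c / total for c in counts]


def scene_changes(frames: List[Frame], threshold: float = 0.5, bins: int = 16) -> List[int]:
    """Indices where the histogram distance to the last kept frame exceeds `threshold`.

    Distance is half the L1 difference of normalized histograms
    (0 = identical, 1 = no overlap). The first frame is always kept.
    """
    if not frames:
        return []
    kept = [0]
    ref = histogram(frames[0], bins)
    for i in range(1, len(frames)):
        h = histogram(frames[i], bins)
        dist = 0.5 * sum(abs(a - b) for a, b in zip(h, ref))
        if dist > threshold:
            kept.append(i)
            ref = h
    return kept
```

Comparing against the last kept frame, rather than the immediately preceding one, also catches slow drift that never exceeds the threshold between adjacent frames.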

Dealing with environmental variability

Drone footage is inherently messy, and understanding the capture environment is crucial for accurate annotation. Shadows, sun glare, camera shake, and poor lighting conditions create noisy visual data. Infrared footage adds another layer of complexity with varying thermal signatures and opacity issues. Because drones work in unpredictable conditions, annotation systems must adjust to constantly changing visual environments while maintaining accuracy standards.

Balancing automation with human validation

Automation speeds up annotation processes, but complete dependence on AI-generated labels introduces risks of bias and errors. Annotation systems without proper human oversight miss rare objects, struggle with edge cases, and fail to confirm intent, creating problematic gaps in model training. Effective solutions combine AI labeling with human verification to ensure quality control. Manually annotated data is essential for producing high-quality ground truth labels: experts carefully mark and inspect images to achieve precise and reliable results.

Hardware limitations at the edge

Edge computing constraints create serious bottlenecks. Drones allocate less than 5% of their power budget for computing tasks, which severely restricts onboard processing capabilities. Most commercial aerial drones operate for only 20-30 minutes per flight, making energy efficiency critical for annotation operations.
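A quick calculation shows how tight that budget is. Assuming a hypothetical 100 Wh battery and a 25-minute flight, the average draw is 100 Wh ÷ (25/60 h) = 240 W, and 5% of that leaves about 12 W for computing. As a small helper:

```python
def compute_power_budget_w(battery_wh: float, flight_minutes: float,
                           compute_share: float = 0.05) -> float:
    """Average power (W) available for onboard computing.

    Average total draw = battery energy / flight time; the compute budget is
    taken as a fixed share of that draw (5% mirrors the upper bound cited above).
    """
    average_draw_w = battery_wh * 60.0 / flight_minutes
    return compute_share * average_draw_w
```

Roughly 12 W is the territory of small embedded accelerators, which is why lightweight models dominate onboard inference.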

Best Practices for Accuracy and Scalability

Smart companies know that optimizing drone data annotation workflows requires strategic methodologies that balance automation with human oversight. These proven approaches boost both accuracy and scalability across diverse operational environments. A dedicated team of skilled annotators plays a crucial role in ensuring high-quality drone data annotation, delivering precise and reliable training data for aerial imagery applications.

Use of weak supervision and confidence scoring

Forward-thinking organizations deploy weak supervision layers that cut annotation requirements dramatically. Instead of labor-intensive pixel-level labeling, teams assign labels to entire subclouds (subsets of a 3D point cloud) or image groups. Preliminary annotations come from a combination of ensemble model voting, historical comparisons, and contextual rules. Confidence scoring then routes uncertain predictions to human reviewers automatically, while high-confidence identifications pass through without manual review. Research demonstrates that adding just 1% of full supervision alongside weak labeling produces significant model performance gains.
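The voting-and-routing step can be sketched as follows. Agreement among ensemble members stands in for a confidence score here, which is one simple choice among many; the item IDs, labels, and 0.8 cut-off are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Routed = List[Tuple[str, str, float]]  # (item_id, majority label, agreement)


def vote(labels: List[str]) -> Tuple[str, float]:
    """Majority label across ensemble members and the fraction that agree with it."""
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes / len(labels)


def route(items: List[Tuple[str, List[str]]],
          min_agreement: float = 0.8) -> Tuple[Routed, Routed]:
    """Split weakly labeled items into auto-accepted and human-review queues.

    Each item is (item_id, labels_from_each_model). Anything whose agreement
    falls below `min_agreement` goes to a human reviewer.
    """
    accepted: Routed = []
    review: Routed = []
    for item_id, labels in items:
        label, agreement = vote(labels)
        target = accepted if agreement >= min_agreement else review
        target.append((item_id, label, agreement))
    return accepted, review
```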

Incremental annotation with object tracking

Advanced object trackers like Deep SORT, FairMOT, and BoT-SORT allow annotations to persist across multiple frames, eliminating redundant labeling work. This incremental method focuses human attention solely on new objects or changing instances. Semi-automatic bounding box annotation methods reduce human workload by 82-96% in single-object tracking scenarios. Bounding boxes are widely used for object detection and localization in drone footage, enabling efficient identification and annotation of targets across aerial imagery. SiamMask and similar tracking algorithms extract target contours even when objects rotate or deform, preserving annotation accuracy throughout drone footage.
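The simplest form of incremental annotation is linear interpolation between human-labeled keyframes; trackers such as those named above replace the straight-line motion with learned motion and appearance models. A minimal sketch, assuming boxes as (x_min, y_min, x_max, y_max) tuples keyed by frame index:

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def interpolate_track(keyframes: Dict[int, Box]) -> Dict[int, Box]:
    """Fill in a box for every frame between human-labeled keyframes.

    Coordinates move linearly from one keyframe to the next.
    """
    frames = sorted(keyframes)
    filled: Dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        span = end - start
        for f in range(start, end):
            t = (f - start) / span
            filled[f] = tuple(pa + t * (pb - pa) for pa, pb in zip(a, b))
    if frames:
        filled[frames[-1]] = keyframes[frames[-1]]
    return filled
```

An annotator labels frames 0 and 4; the three boxes in between are generated and only need correcting if the object changes direction.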

Annotation interoperability and standard formats

Top-performing drone data annotation services make format compatibility a priority. Teams utilize standard formats including COCO JSON, YOLO TXT, and Pascal VOC XML to ensure annotations work immediately across different systems. This standardization prevents time loss from data cleaning or format conversion between incompatible systems. Bounding box annotation remains the dominant method for object detection in specialized drone datasets like VisDrone, UAVDT, and AI-TOD.
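Conversions between these formats are simple arithmetic on box coordinates. COCO stores [x_min, y_min, width, height] in pixels, YOLO TXT stores a class ID followed by center coordinates and size normalized to the image dimensions, and Pascal VOC XML stores corner coordinates. The helpers below are a sketch; they ignore the 1-based pixel convention some VOC tools apply and do not write complete XML or JSON files.

```python
from typing import List, Tuple


def coco_to_yolo(bbox: List[float], img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """COCO [x_min, y_min, width, height] in pixels -> YOLO (x_c, y_c, w, h) normalized to 0-1."""
    x, y, w, h = bbox
    return ((x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h)


def coco_to_voc(bbox: List[float]) -> Tuple[float, float, float, float]:
    """COCO [x_min, y_min, width, height] -> Pascal VOC (xmin, ymin, xmax, ymax) in pixels."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


def yolo_line(class_id: int, bbox: List[float], img_w: int, img_h: int) -> str:
    """One line of a YOLO TXT label file: class_id x_center y_center width height."""
    xc, yc, w, h = coco_to_yolo(bbox, img_w, img_h)
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```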

💡 Changes to the neural network architecture itself, such as new segmentation model designs, can further improve segmentation accuracy in drone data annotation.

Continuous model retraining with feedback loops

Machine learning models degrade over time due to environmental changes and shifting data distributions. Smart companies counter this with continuous training systems that adapt models to new conditions automatically. These systems include:

- User-flagged false detections routed to retraining datasets

- Periodic fine-tuning using newly labeled data

- Monitoring tools that detect accuracy drops and trigger retraining

- Clear labeling guidelines that ensure annotation consistency

- Incorporation of satellite imagery to improve model robustness and accuracy, especially for tasks like coastline detection and monitoring natural changes

Versioned training queues enable teams to retrain models nightly or weekly, helping systems adapt to new environments, lighting conditions, or object types without manual intervention.
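Accuracy-drop monitoring can be as simple as a rolling window over reviewed predictions. In this hypothetical sketch, each user-confirmed or user-flagged detection is recorded, and retraining is triggered when rolling accuracy falls more than a tolerance below the baseline; the class name, window size, and tolerance are assumptions.

```python
from collections import deque
from typing import Deque


class AccuracyMonitor:
    """Flags retraining when rolling accuracy drops below baseline minus tolerance.

    Each recorded outcome is a reviewed prediction: True = confirmed correct,
    False = flagged as a false detection by a user or reviewer.
    """

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 200):
        self.baseline = baseline
        self.tolerance = tolerance
        self.window: Deque[bool] = deque(maxlen=window)

    def record(self, correct: bool) -> bool:
        """Add one reviewed prediction; return True if retraining should be triggered."""
        self.window.append(correct)
        if len(self.window) < self.window.maxlen:
            return False  # not enough evidence yet
        accuracy = sum(self.window) / len(self.window)
        return accuracy < self.baseline - self.tolerance
```

Flagged items can be appended to the next versioned training queue at the same moment they are recorded, so the data that triggered retraining is also the data the retrained model learns from.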

Conclusion

Drone data annotation has established itself as a critical technology that delivers measurable results across industries. Companies implementing advanced annotation pipelines gain substantial competitive advantages through faster decision-making and higher-quality data processing. The balance between edge computing and cloud processing creates robust systems that handle massive data volumes while maintaining precision.

Eight distinct application areas showcase the versatility of this technology. Agricultural monitoring, construction oversight, disaster response, and military reconnaissance all benefit from automated object detection, terrain segmentation, and real-time tracking capabilities. These applications demonstrate how proper annotation transforms raw aerial footage into actionable intelligence.

Technical obstacles persist, yet they are not insurmountable. Data volume challenges, environmental variability, and hardware constraints require strategic solutions. Companies that embrace weak supervision techniques, implement incremental annotation workflows, standardize data formats, and maintain continuous model retraining achieve the accuracy improvements detailed throughout this analysis.

The opportunity is clear for organizations ready to act. Hardware capabilities continue advancing while annotation algorithms become more sophisticated. Companies that invest in these technologies today position themselves as industry leaders tomorrow.

Drone data annotation represents a fundamental shift in aerial intelligence gathering. Organizations can now process visual information at scales and speeds that were impossible just years ago. The question is not whether this technology will reshape industries; it already has. The question is whether your organization will lead this change or follow it.