Data Augmentation: solutions to the lack of data in AI

To build effective AI, machine learning, or deep learning models, the quality and quantity of available data are decisive factors. However, in some situations, access to data sets may be limited. This can hinder the training process and compromise model performance.


Data Augmentation was developed to address this problem. The approach has two main advantages. First, it increases the size of the data set. Second, it diversifies its composition, which improves the model's ability to generalize and handle a variety of use cases. This article explains how Data Augmentation works and how to implement it.

How does data augmentation work?

Creating augmented data generally takes place in several steps, which often require human review and qualification:

1. Data selection

First, it is necessary to select the data set on which to apply the data augmentation mechanisms.

2. Definition of transformations

3. Applying transformations

4. Integrating with the data set

The newly generated data is then integrated into the existing data set in order to increase its size and diversity. Data Augmentation is generally applied only to the training set, so that the validation and test sets continue to reflect real, unmodified data and the evaluation of the model stays unbiased.
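The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names `add_noise` and `augment_training_set`, the toy data, and the noise scale are all hypothetical, and the only transformation used is a small random perturbation of numeric features.

```python
import random


def add_noise(value, rng, scale=0.05):
    """Hypothetical transformation: perturb a number with small Gaussian noise."""
    return value + rng.gauss(0.0, scale)


def augment_training_set(train, transforms, copies=2, seed=0):
    """Return the original samples plus `copies` transformed variants of each."""
    rng = random.Random(seed)
    augmented = list(train)                      # step 4: originals are kept
    for features, label in train:                # step 1: the selected data
        for _ in range(copies):
            transform = rng.choice(transforms)   # step 2: the defined transformations
            new_features = [transform(x, rng) for x in features]  # step 3: apply
            augmented.append((new_features, label))  # the label is left unchanged
    return augmented


# Toy data: (features, label) pairs. Only the training set is augmented.
train = [([0.1, 0.2], 0), ([0.9, 0.8], 1)]
test = [([0.5, 0.5], 1)]  # left untouched so evaluation uses real data

augmented_train = augment_training_set(train, [add_noise])
print(len(train), "->", len(augmented_train))
```

Keeping the label unchanged is only valid when the transformation preserves the meaning of the sample, which is why the choice of transformations usually needs human review.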

What data format is affected by this method?

Data augmentation can be applied in various fields and to a wide variety of data formats, including:

Imagery

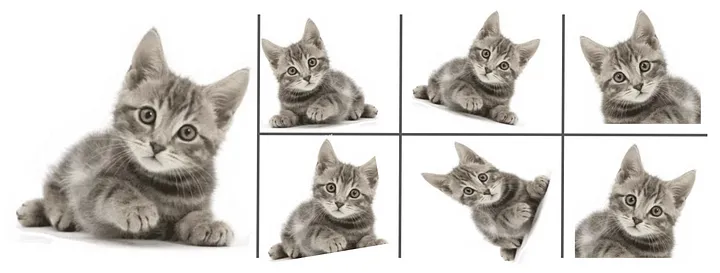

In the field of “Computer Vision”, image data sets can benefit from Data Augmentation techniques. Examples include:

· medical images for the detection of diseases;

· satellite images for mapping;

· vehicle images for traffic sign recognition.

Audio

Data Augmentation also applies to audio applications such as speech recognition or the detection of sound events. It can be used to generate variations in frequency, intensity, or sound environment.

Text

Time Series

Sequential data, such as financial or weather time series, can also benefit from Data Augmentation. Augmenting this data produces variations in trend, seasonality, or noise patterns. This can help a machine learning or deep learning model better capture the complexity of real data.
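As a rough illustration of time series augmentation, the sketch below applies three simple transformations to a list of values: jittering (adding noise), magnitude scaling, and adding a linear trend. The article does not name these techniques, so they are assumptions chosen for simplicity, and the function names and parameter values are hypothetical.

```python
import random


def jitter(series, rng, sigma=0.05):
    """Add small Gaussian noise to every point."""
    return [x + rng.gauss(0.0, sigma) for x in series]


def scale(series, rng, low=0.9, high=1.1):
    """Multiply the whole series by one random factor."""
    factor = rng.uniform(low, high)
    return [x * factor for x in series]


def add_trend(series, slope):
    """Add a linear drift of `slope` per time step."""
    return [x + slope * t for t, x in enumerate(series)]


rng = random.Random(0)
series = [1.0, 1.2, 0.9, 1.1, 1.3, 1.0]

variants = [jitter(series, rng), scale(series, rng), add_trend(series, 0.02)]
for variant in variants:
    print([round(x, 3) for x in variant])
```

All three transformations keep the length and ordering of the series, so the augmented samples remain valid inputs for a model trained on the original format.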

What are the possible transformations?

Data Augmentation offers a varied range of transformations depending on the type of dataset and the requirements of the task.

For images

To create new variations, the following transformations can be applied to images:

· rotation;

· cropping;

· brightness adjustment;

· zoom.
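The image transformations listed above can be sketched without any external library by treating a grayscale image as a list of rows of pixel values (0 to 255). This representation and the function names are assumptions made to keep the example self-contained; real projects typically rely on an image or array library instead.

```python
def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def crop(image, top, left, height, width):
    """Keep a rectangular region of the image."""
    return [row[left:left + width] for row in image[top:top + height]]


def adjust_brightness(image, factor):
    """Scale pixel intensities and clip them to the 0..255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def zoom_in(image, factor):
    """Zoom on the center: crop the middle, then enlarge it by pixel repetition."""
    h, w = len(image), len(image[0])
    ch, cw = h // factor, w // factor
    center = crop(image, (h - ch) // 2, (w - cw) // 2, ch, cw)
    return [[p for p in row for _ in range(factor)]
            for row in center for _ in range(factor)]


image = [
    [0, 10, 20, 30],
    [40, 50, 60, 70],
    [80, 90, 100, 110],
    [120, 130, 140, 150],
]

for variant in (rotate_90(image), crop(image, 1, 1, 2, 2),
                adjust_brightness(image, 1.5), zoom_in(image, 2)):
    print(variant)
```

Each function returns a new image and leaves the original untouched, so several variants can be generated from the same source picture.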

For text

For text, the following techniques can be used to generate additional examples:

· paraphrasing;

· word replacement;

· adding or deleting words.
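Word replacement, deletion, and addition can be sketched on a tokenized sentence as follows. The `SYNONYMS` table is a hypothetical toy dictionary standing in for a real thesaurus, and the function names and probabilities are assumptions. Paraphrasing is not shown because it usually requires a language model.

```python
import random

# Hypothetical toy synonym table; a real project would use a larger resource.
SYNONYMS = {"quick": ["fast", "rapid"], "happy": ["glad", "joyful"]}


def replace_words(tokens, rng, synonyms=SYNONYMS):
    """Replace every word that has a known synonym with one of its synonyms."""
    return [rng.choice(synonyms[t]) if t in synonyms else t for t in tokens]


def delete_words(tokens, rng, p=0.2):
    """Drop each word with probability p, keeping at least one word."""
    kept = [t for t in tokens if rng.random() >= p]
    return kept or [rng.choice(tokens)]


def insert_word(tokens, rng, synonyms=SYNONYMS):
    """Insert a synonym of one of the sentence's words at a random position."""
    candidates = [t for t in tokens if t in synonyms]
    if not candidates:
        return list(tokens)
    new_word = rng.choice(synonyms[rng.choice(candidates)])
    position = rng.randint(0, len(tokens))
    return tokens[:position] + [new_word] + tokens[position:]


rng = random.Random(0)
tokens = "the quick dog looks happy today".split()

print(" ".join(replace_words(tokens, rng)))
print(" ".join(delete_words(tokens, rng)))
print(" ".join(insert_word(tokens, rng)))
```

Deleting or replacing the wrong word can change the meaning of a sentence (for example a negation), so the generated examples are worth reviewing before they are added to the training set.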

For audio files

In speech recognition, the following transformations can simulate different acoustic conditions:

· speed change;

· pitch variation;

· the addition of noise.
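Two of these audio transformations can be sketched on a raw waveform stored as a list of samples: changing the playback speed by linear-interpolation resampling, and adding Gaussian noise at a chosen signal-to-noise ratio. The function names, the 8000 Hz sample rate, and the 20 dB default are assumptions. Note that naive resampling changes speed and pitch together; shifting pitch independently of duration generally calls for a dedicated audio library.

```python
import math
import random


def change_speed(samples, rate):
    """Resample by linear interpolation; rate > 1 shortens (speeds up) the signal."""
    n_out = max(1, int(len(samples) / rate))
    last = len(samples) - 1
    out = []
    for i in range(n_out):
        pos = i * rate
        lo = min(int(pos), last)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append((1 - frac) * samples[lo] + frac * samples[hi])
    return out


def add_noise(samples, rng, snr_db=20.0):
    """Add Gaussian noise so that the signal-to-noise ratio is about snr_db."""
    power = sum(s * s for s in samples) / len(samples)
    sigma = math.sqrt(power / (10 ** (snr_db / 10)))
    return [s + rng.gauss(0.0, sigma) for s in samples]


rng = random.Random(0)
sample_rate = 8000  # assumed sample rate in Hz
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(800)]

faster = change_speed(tone, 2.0)
slower = change_speed(tone, 0.5)
noisy = add_noise(tone, rng)
print(len(tone), len(faster), len(slower), len(noisy))
```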

Finally, for tabular data

In tabular data, common transformation options are:

· perturbation of numerical values;

· one-hot encoding for categorical variables;

· generation of synthetic data by interpolation or extrapolation.
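Perturbation and interpolation on numeric rows can be sketched as follows. The interpolation step picks a random point on the segment between two rows of the same class, which is a simplified idea in the spirit of SMOTE-style oversampling (without the nearest-neighbour search). The function names, the toy rows, and the noise scale are assumptions.

```python
import random


def perturb(row, rng, scale=0.01):
    """Multiply each numeric value by a factor close to 1."""
    return [x * (1 + rng.gauss(0.0, scale)) for x in row]


def interpolate(row_a, row_b, rng):
    """Create a synthetic row at a random point between two existing rows."""
    t = rng.random()
    return [a + t * (b - a) for a, b in zip(row_a, row_b)]


rng = random.Random(0)

# Toy rows of one class: [age, income]. Interpolate only within a class,
# so the synthetic row can reuse that class label.
minority_rows = [[25.0, 30000.0], [35.0, 42000.0], [30.0, 36000.0]]

row_a, row_b = rng.sample(minority_rows, 2)
synthetic = interpolate(row_a, row_b, rng)
noisy = perturb(minority_rows[0], rng)
print(synthetic)
print(noisy)
```

Interpolating between rows of different classes would produce samples with an ambiguous label, which is one way an inappropriate transformation can degrade data quality.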

💡 It is important to choose appropriate transformations to maintain the relevance and meaning of the data. An inappropriate transformation may compromise data quality and result in poor performance of the Machine Learning or Deep Learning model.

A perspective: history of neural networks and data augmentation

The history of neural networks dates back to the beginnings of artificial intelligence, with attempts to model the human brain. The first experiments were limited by the available computing power. Thanks to the technological advances of the last decade and in particular to Deep Learning, neural networks have experienced a revival.

Current data preparation methods, and in particular Data Augmentation, have become a pillar of this revival. By enriching training data sets with controlled variations, they loosely echo the idea of neuroplasticity: the network adapts to a wider range of inputs. This relationship between the history of neural networks and Data Augmentation reflects the evolution of machine learning.

Data Augmentation allows modern networks to learn from larger and more diverse datasets. Viewing current data augmentation methods in the light of the history of neural networks makes it easier to understand the evolution of artificial intelligence and the current challenges in collecting and processing data.

A quick reminder: how does a neural network work?

An artificial neural network works according to principles inspired by the functioning of the human brain. It is composed of several layers of interconnected neurons, each of which acts as an elementary processing unit. Information flows through these neurons as numerical values, and the weight associated with each connection determines how much that connection contributes.

During learning, these weights are adjusted iteratively to optimize network performance on a specific task. With each repetition, the network receives training examples and adjusts its weights to minimize a defined cost function.

During training, data is presented to the network in batches. Each batch is propagated through the network, and the model's predictions are compared to the actual labels to calculate the error. Using backpropagation and gradient descent optimization, the weights are adjusted to reduce this error.
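The training loop described above can be sketched with the smallest possible "network": a single linear neuron with one weight and one bias, trained by mini-batch gradient descent on a mean squared error. The toy data, learning rate, batch size, and number of epochs are assumptions chosen so that the example converges. With a single neuron the gradient is written by hand; in a multi-layer network, backpropagation computes the same kind of gradient layer by layer.

```python
import random


def train(data, epochs=300, batch_size=2, lr=0.05, seed=0):
    """Fit y = w * x + b by mini-batch gradient descent on the squared error."""
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)                              # new batch order each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w = grad_b = 0.0
            for x, y in batch:
                error = (w * x + b) - y                # prediction vs. actual label
                grad_w += 2 * error * x / len(batch)   # d(MSE)/dw
                grad_b += 2 * error / len(batch)       # d(MSE)/db
            w -= lr * grad_w                           # gradient descent step
            b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1, so the ideal weights are known.
data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]]
w, b = train(data)
print(round(w, 2), round(b, 2))
```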

Once trained, the network can be used to make predictions about new data by simply applying the computational operations learned during training.

